Contributors


Re: Odoo Experience Exhibition 2024, Visitors Data

YES INTERESTED

Thanks for sharing Eliza

Best regards,

Michel Stroom

Netherlands

--

Office Everywhere

t: +31 6 53360677

e: mstroom@office-everywhere.com

w: Office-Everywhere.com

Office

Ramstraat 31-33

3581 HD Utrecht

On 12 Aug 2024, at 16:57, Amelia <notifications@odoo-community.org> wrote:

Hope you are doing well !!

To help you, we are offering detailed visitor data collected for the exhibition

We are following up to confirm if you are interested in acquiring the Visitors/Attendees List.

Event Name : Odoo Experience Exhibition 2024

Date : 02 - 04 Oct 2024

Location : Brussels Expo - Exhibition Center, Brussels, Belgium

Counts : 4,300

If you are interested in acquiring the Exact list, we can Send you the Discounted cost and real additional details.

Each record of the list contains: Contact Name, Email Address, Company Name, URL/Website, Phone No, Title/Designation.

Exhibitors who utilized our visitor data in previous events have seen a significant increase in lead conversion rates and tailored their follow-up strategies effectively. By analyzing this data, you can better understand your audience, refine your marketing approach, and follow up with highly qualified leads.

We are looking forward to hearing from you.

If you are interested in the visitors list, please reply to me with "YES INTERESTED".

Thanks & Regards,

Eliza

Market Analyst.

_______________________________________________

Mailing-List: https://odoo-community.org/groups/contributors-15

Post to: mailto:contributors@odoo-community.org

Unsubscribe: https://odoo-community.org/groups?unsubscribe

by Michel Stroom - 10:26 - 22 Aug 2024 -

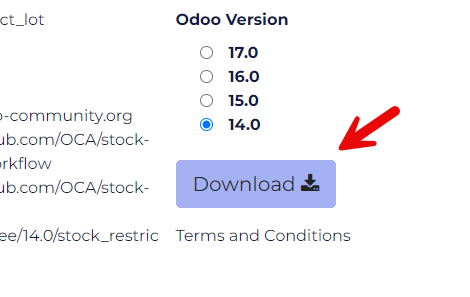

Re: odoo-community.org has a new home

Thank you very much! I don't think it's related to this migration but the download button of the shop product page doesn't seem to work (nothing gets downloaded). Not that I'm troubled by it. Just reporting.

--

田代祥隆 Yoshi Tashiro

コタエル株式会社 / Quartile

by Yoshi Tashiro. - 03:25 - 22 Aug 2024 -

odoo-community.org has a new home

Hi everyone,

https://odoo-community.org has found a new home on a more powerful server.

Everything should work as before, just faster. Let me know if you notice anything is off.

Best regards,

-Stéphane

by Stéphane Bidoul - 12:06 - 22 Aug 2024 -

Re: Test

Success again!

Regards,

Technology Services @ www.serpentcs.com

Business Solutions @ www.serpentcs.in

Enterprise Mobile Apps @ www.odooonline.com

Quality Assurance @ www.odooqa.com

SAP Hana @ www.prozone-tech.com

Portal & DMS @ www.alfray.in

by Jay Vora - 11:41 - 21 Aug 2024 -

Re: Test

And testing again from the other side.

by Stéphane Bidoul - 11:26 - 21 Aug 2024 -

Re: Large Data Files

Nice to see pandas run fast.

For those who want to run very, very ... very fast, consider using polars.

by David BEAL - 09:16 - 21 Aug 2024 -

Re: Large Data Files

Queue job and batching can work. It is commonplace. But if it is CPU/memory, then honestly, after optimising what you can within the framework (e.g. as per Holger), a lot of the time you can just get away with running a separate worker on a separate port for long-running jobs and setting the limits/timeouts high. That is how a lot of people deploy cron workers these days, and in older Odoo we used to have to do it to run financial reports, and seemingly again now. 30,000 simple records is not so much.

There may also be some db tuning you can do around WAL files, checkpoints etc. if they get in the way.

by "Graeme Gellatly" <graeme@moahub.nz> - 01:56 - 21 Aug 2024 -

Re: Large Data Files

Hello,

Thank you very much for your quick responses :)

Tom Blauwendraat: I am running on v16

Holger Brunn: adapting the script with .with_context(tracking_disable=True) to disable email notifications divides the running time by at least 4

Goran Sunjka: It is indeed an interesting idea, I was wondering if I could store a hash of the row in Postgres to check if an existing record was updated to separate "create" and "update" action

Daniel Reis: This is indeed the problem I encountered.

Thank you all for your replies, it helps a lot :)

Jérôme

by "Jerôme Dewandre" <jerome.dewandre.mail@gmail.com> - 11:56 - 20 Aug 2024 -
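The row-hash idea raised in the message above can be sketched in plain Python. This is only an illustration under assumptions that are not from the thread: rows are dicts keyed by a legacy identifier, the digest is SHA-256 over a JSON serialisation, and `stored_hashes` is a hypothetical mapping of the digests saved during the previous sync (for example in an extra column).

```python
import hashlib
import json


def row_hash(row):
    """Stable SHA-256 digest of a row; key order does not affect the result."""
    payload = json.dumps(row, sort_keys=True, default=str)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def classify(rows, stored_hashes):
    """Sort incoming rows into create / update / unchanged buckets.

    `rows` maps a legacy key to the row dict; `stored_hashes` maps the same
    keys to the digest saved during the previous sync (hypothetical storage).
    """
    create, update, unchanged = [], [], []
    for key, row in rows.items():
        digest = row_hash(row)
        if key not in stored_hashes:
            create.append(key)
        elif stored_hashes[key] != digest:
            update.append(key)
        else:
            unchanged.append(key)
    return create, update, unchanged


previous = {"A1": row_hash({"name": "Kickoff", "date_begin": "2024-08-20"})}
incoming = {
    "A1": {"name": "Kickoff", "date_begin": "2024-08-21"},  # date moved
    "A2": {"name": "Review", "date_begin": "2024-08-22"},   # new row
}
result = classify(incoming, previous)
print(result)  # (['A2'], ['A1'], [])
```

Only the keys in the first two lists would then need a `create()` or `write()` call; unchanged rows are skipped entirely.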

Re: Test

It's working!

by Pierre Verkest - 11:11 - 20 Aug 2024 -

Test

This is a test message, preparing for the migration of the OCA Odoo instance to a new server.

-sbi

by Stéphane Bidoul - 10:41 - 20 Aug 2024 -

Re: Large Data Files

I would expect this code to just abort for a non trivial quantity of records.

The reason why is that this is a single worker doing a single database transaction.

So the worker process will probably hit the time and CPU limits and be killed, and no records would be saved because of a transaction rollback.

And if you increase those limits a lot, you will probably cause long table locks on the database, and hurt other users and processes.

Going direct to the database can work if the data is pretty simple.

It can work but it can also be a can of worms.

One option is to load the data incrementally.

In the past I have used external ETL tools or scripts to do this.

Keeping it inside Odoo, one of the tools that can help is the Job Queue, possibly along with something like base_import_async:

https://github.com/OCA/queue/tree/16.0/base_import_async

Thanks

--

DANIEL REIS

MANAGING PARTNER

Meet with me.

M: +351 919 991 307

E: dreis@OpenSourceIntegrators.com

A: Avenida da República 3000, Estoril Office Center, 2649-517 Cascais


by Daniel Reis - 07:45 - 20 Aug 2024 -
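The single-transaction problem described in the message above, and the incremental alternative, can be shown with a small self-contained sketch. It uses Python's built-in `sqlite3` as a stand-in for the real database, so it is not Odoo code; the `event` table, the `batched` and `load_incrementally` helpers and the batch size are all assumptions made for illustration. The point is only that committing per batch means a late failure does not roll back everything.

```python
import sqlite3
from itertools import islice


def batched(rows, size):
    """Yield successive lists of at most `size` rows."""
    it = iter(rows)
    while batch := list(islice(it, size)):
        yield batch


def load_incrementally(conn, rows, batch_size=1000):
    """Insert rows batch by batch, committing after each batch.

    Returns the number of rows saved. A failing batch is rolled back and
    stops the load, but earlier batches stay committed.
    """
    saved = 0
    for batch in batched(rows, batch_size):
        try:
            conn.executemany(
                "INSERT INTO event (name, location) VALUES (?, ?)", batch
            )
            conn.commit()
        except sqlite3.Error:
            conn.rollback()
            break
        saved += len(batch)
    return saved


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE event (name TEXT NOT NULL, location TEXT)")
rows = [(f"event {i}", "Room 1") for i in range(2500)]
rows.append((None, "Room 2"))  # violates NOT NULL, so the last batch fails

saved = load_incrementally(conn, rows, batch_size=1000)
print(saved)  # 2000
print(conn.execute("SELECT COUNT(*) FROM event").fetchone()[0])  # 2000
```

Inside Odoo the same shape would presumably be one ORM `create()` call per batch, with each batch optionally handed to the job queue mentioned above; that part is not shown here.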

Re: Large Data Files

Hello Jerôme,

I had a similar issue some time ago with a huge nightly batch job. I also used pandas. In the end I created two dataframes and only synchronized the diff between legacy and Odoo. Pandas can process huge dataframes very fast. This reduced sync time from multiple hours to minutes.

Just sharing. Don't know if this is suitable for your problem.

BR Goran


by Goran Sunjka - 06:46 - 20 Aug 2024 -
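The "only synchronize the diff" approach from the message above can be sketched without pandas. This is a simplified illustration using plain dicts rather than the two dataframes the author describes; the `diff_records` helper, the legacy keys and the field names are made up for the example.

```python
def diff_records(legacy, current):
    """Split records into create/update/delete sets by comparing two snapshots.

    Both arguments map a legacy key to a dict of field values.
    """
    to_create = {k: v for k, v in legacy.items() if k not in current}
    to_update = {
        k: v for k, v in legacy.items() if k in current and current[k] != v
    }
    to_delete = [k for k in current if k not in legacy]
    return to_create, to_update, to_delete


legacy = {
    "A1": {"name": "Kickoff", "location": "Room 1"},
    "A2": {"name": "Review", "location": "Room 2"},
    "A3": {"name": "Demo", "location": "Room 3"},
}
current = {
    "A1": {"name": "Kickoff", "location": "Room 1"},  # identical, skipped
    "A2": {"name": "Review", "location": "Room 9"},   # stale location
    "A4": {"name": "Retro", "location": "Room 4"},    # no longer in legacy
}

to_create, to_update, to_delete = diff_records(legacy, current)
print(sorted(to_create))  # ['A3']
print(sorted(to_update))  # ['A2']
print(to_delete)          # ['A4']
```

With a nightly sync where most rows do not change, the three result sets are usually far smaller than the full data set, which is where the time saving comes from.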

Re: Large Data Files

> 4. Some better ideas?

Measure whether any code running during create has non-linear behavior. As a rule of thumb, `.with_context(tracking_disable=True)` makes a world of difference for mass-creating mail.thread records, if you can do without the chatter message.

Bypassing the ORM is a can of worms you should only open when absolutely necessary.

--

Your partner for the hard Odoo problems

https://hunki-enterprises.com

by Holger Brunn - 06:21 - 20 Aug 2024 -
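One rough way to check for the non-linear behavior mentioned in the message above is to time the same operation at growing batch sizes and compare the cost per record. The sketch below is generic Python, not Odoo code: `per_record_cost` is a hypothetical helper, and `quadratic_create` is a made-up stand-in for a `create()` that hides an accidental O(n²) step. In a real test you would pass a function that calls the model's `create()` instead.

```python
import time


def per_record_cost(create, make_vals, sizes=(1000, 2000, 4000)):
    """Time `create` on batches of growing size; return seconds per record."""
    costs = {}
    for n in sizes:
        vals_list = [make_vals(i) for i in range(n)]
        start = time.perf_counter()
        create(vals_list)
        costs[n] = (time.perf_counter() - start) / n
    return costs


def quadratic_create(vals_list):
    """Hypothetical stand-in with an accidental O(n^2) duplicate check."""
    seen = []
    for vals in vals_list:
        if vals["name"] not in seen:  # list membership: linear scan per record
            seen.append(vals["name"])
    return seen


costs = per_record_cost(
    quadratic_create,
    lambda i: {"name": f"event {i}"},
    sizes=(500, 1000, 2000),
)
for n, cost in costs.items():
    print(f"{n:>6} records: {cost * 1e6:.2f} µs per record")
```

If the per-record cost stays roughly flat as the batch grows, the code scales linearly; if it keeps climbing, something inside the create path is worth profiling.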

Re: Large Data Files

There's create_multi also in some Odoo versions, but which one are you on?


by Tom Blauwendraat - 05:51 - 20 Aug 2024 -

Large Data Files

Hello,

I am currently working on a sync with a legacy system (adesoft) containing a large amount of data that must be synchronized on a daily basis (such as meetings).

It seems everything starts getting slow when I import 30.000 records with the conventional "create()" method.

I suppose the ORM might be an issue here. Potential workarounds:

1. Bypass the ORM to create a record with self.env.cr.execute (but if I want to delete them I will also need a custom query)

2. Bypass the ORM with stored procedures (https://www.postgresql.org/docs/current/sql-createprocedure.html)

3. Increase the CPU/RAM/Worker nodes

4. Some better ideas?

What would be the best way to go?

A piece of my current test (df is a pandas dataframe containing the new events):

    @api.model
    def create_events_from_df(self, df):
        Event = self.env['event.event']
        events_data = []
        for _, row in df.iterrows():
            event_data = {
                'location': row['location'],
                'name': row['name'],
                'date_begin': row['date_begin'],
                'date_end': row['date_end'],
            }
            events_data.append(event_data)
        # Create all events in a single batch
        Event.create(events_data)

Thanks in advance if you read this, and thanks again if you replied :)

Jérôme

by "Jerôme Dewandre" <jerome.dewandre.mail@gmail.com> - 05:31 - 20 Aug 2024 -

Re: Visitors Database of Odoo Website Day 2024

Hello everyone,

We are trying to find a way to stop receiving this kind of spam on the OCA Contributors Mailing List.

Please don't reply to this person's first email: a reply goes to everyone on the mailing list.

Thanks!

by Virginie Dewulf - 02:30 - 16 Aug 2024 -

Re: Visitors Database of Odoo Website Day 2024

Hello,

Thank you so much, but actually I am not interested, thank you very much.

Have a nice day.

by hawa1 - 01:54 - 16 Aug 2024 -

Re: Visitors Database of Odoo Website Day 2024

Hello,

No, I am not interested, thank you very much.

Have a nice day.


by Fernando - 01:00 - 16 Aug 2024 -

Re: Visitors Database of Odoo Website Day 2024

How much ?


by milori2006 - 04:45 - 15 Aug 2024 -

Visitors Database of Odoo Website Day 2024

Hi,

Hope all is well with you!

We're offering a special deal: discounted cost and extra information.

We're following up to see if you're interested in getting the Visitors/Attendees List.

Event Details:

Event Name: Odoo Website Day 2024

Date:03 Oct 2024

Location: Brussels Exhibition Centre at the Brussels Expo, Brussels, Belgium

Visitors/Attendees:5,000

Each record contains: Contact Name, Email Address, Company Name, URL/Website, Phone No, Title/Designation.

Could you let us know if you're interested? We'll then send you the discounted cost and more details.

Waiting for your positive response.

Thanks and Regards

Ivy Rodriguez

by Ivy.Rodriguez@galaxileadshq.com - 03:01 - 15 Aug 2024
